The graduate student received the award for research on improving the efficiency and scalability of datacenter infrastructure for large language model training and inference.

Georgia Tech School of Electrical and Computer Engineering (ECE) graduate research assistant Irene Wang was named one of 10 recipients of the NVIDIA Graduate Research Fellowship.

The fellowship is awarded to students doing outstanding work relevant to NVIDIA technologies.

Wang’s research addresses the critical energy and scalability bottlenecks of large language model (LLM) training and inference.

In recent years, machine learning has undergone a rapid transformation driven by the rise of LLMs such as GPT and Llama.

“This shift is fundamentally reshaping the design of artificial intelligence (AI) supercomputers,” Wang said. “As models grow beyond the computational and memory capabilities of a single accelerator, a distributed approach becomes necessary to execute these models.”

Because running a model across many accelerators requires constantly moving data between them, performance depends on more than raw hardware speed.

“The network topology and how the devices are connected to each other greatly affects the performance and efficiency of the deep learning execution,” Wang said.

Modern training and inference workloads for these systems now span thousands of accelerators, simultaneously stressing compute, memory, and interconnect resources. Current solutions often tackle these challenges in isolation, optimizing each component separately.

Wang’s work challenges that paradigm, focusing on the co-optimization of accelerator architecture, network design, and distribution strategy.

By optimizing the hardware architecture and the network design at the same time, Wang’s research uncovers efficient designs that are impossible to find when looking at the components in isolation. This integrated approach aims to break through current limits on performance and energy usage.

By reducing energy consumption and potentially lowering hardware demands, such as requiring fewer chips to perform the same tasks, this work helps cut operational and hardware costs for both companies and researchers.

“Ultimately, my research aims to shift AI infrastructure design away from raw performance alone toward sustainable, energy-efficient scalability,” Wang said. “By getting more processing power out of every watt of electricity, this work reduces the environmental cost of large-scale AI and lowers barriers to entry for advanced deep learning systems.”

Wang is a third-year computer science Ph.D. student advised by ECE Assistant Professor Divya Mahajan. She has been recognized as an MLCommons Rising Star. Before coming to Georgia Tech, she earned her bachelor’s degree in computer engineering from the University of British Columbia.

Related Content

Mahajan Receives Inaugural Google ML and Systems Junior Faculty Award

The award supports early-career researchers whose work advances efficient, scalable, secure, and trustworthy computing systems.

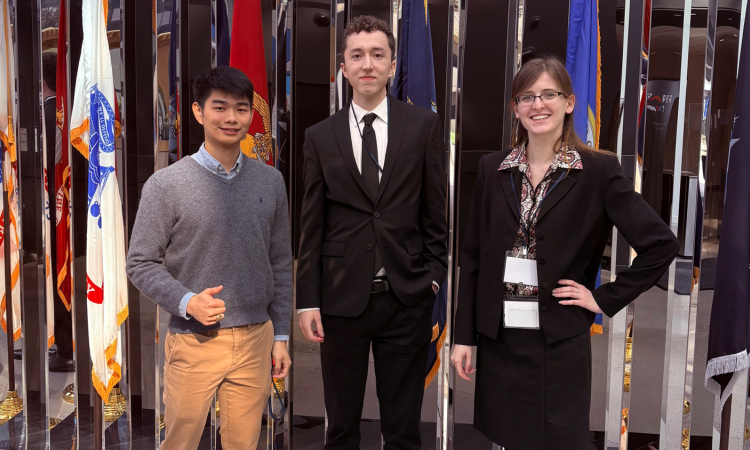

CoE Trio Share Work at Draper Research Symposium

Georgia Tech Draper Scholars Laurel Hilger, Gus Richter, and Kevin Zhang presented thesis research at a prominent national security symposium in Cambridge, Mass.